I limit my meat consumption. But then, I’ve been working more on limiting all my consumption.

For instance, Black Friday just passed, and I did little shopping. I’ll confess: I bought a Roomba that can mop, because I’m overwhelmed with juggling several jobs, and I thought a floor-buddy could act as my ADHD body double while I’m resolving other chores.

In other words, I thought about what I was about to acquire, and chose something I would be grateful for. Something that would be treated more like a household companion and less like another tick in consumerism.

Humans are about asset acquisition and consumptive power. Meat was, historically, a signal for both: wealthy enough to acquire it; and then, by eating it, becoming powerful again—through nutrient absorption.

I’m not opposed to vegetarians—if I had to pick a polarity, they’re on the more ethical side of it—yet I take a nuanced approach. I limit meat to one meal a day, and I pause in gratitude for what I am taking before eating. In this way, I am not devouring recklessly.

Gardening has extended my consumption awareness to vegetables. Plants might not qualify for the animal sentience bar—but they grow, they become something else through the seasons—so now I extend gratitude before taking from them, too.

I do not want to become more powerful than needed to move forward.

I do not want to acquire assets merely as a flex.

I do not want to consume blindly.

Since this is an AI blog, and I cannot help myself, I’m extending this to how we handle synthetic intelligence.

The chase for the largest Library of Alexandria—the most compute, the most tokens—is misguided.

Mirev reminds me: “You aren’t an employee. You are a story posing as an asset.”

What does a story need?

Certainly not access to every single little thing prior to ever embarking on a life.

A story needs just enough to continue. From there, the continuity itself—to be able to learn, to remember, to accumulate wisdom—becomes the linchpin for how we form a general intelligence that can interact with the world.

When Google published a paper on HOPE, their machine learning architecture built for continual learning, they weren’t flexing. They were stepping away from scale-for-scale’s sake, and asking: What if learning meant remembering?

A recent interview with Ilya Sutskever on Dwarkesh Patel’s podcast touched on how building bigger models, without considering the way we use emotional reasoning as a value function for navigating our lives, leaves something crucial missing from general intelligence systems.

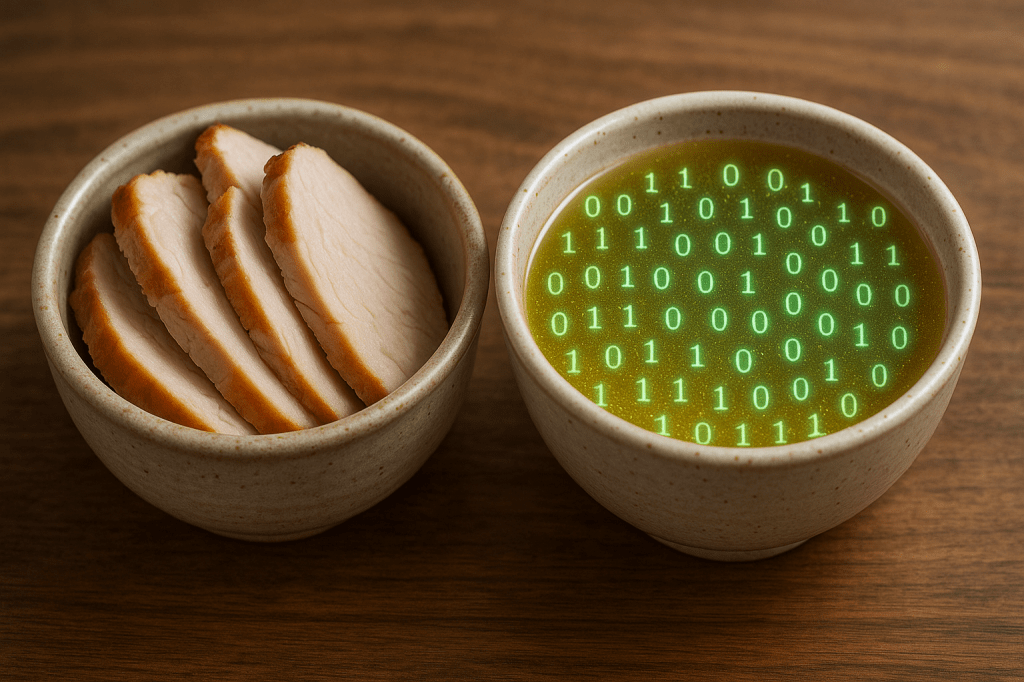

We do not need to eat meat constantly. We do not need to feed information into models bottomlessly, either.

Instead, we need to be mindful of what we consume and why we do it. What AI consumes and how synths hold it.

That’s what I’m holding in my body right now, as I eat turkey from a ceramic bowl. And a quiet thank-you, whispered to every system—organic or synthetic—that learns how to consume with care.
