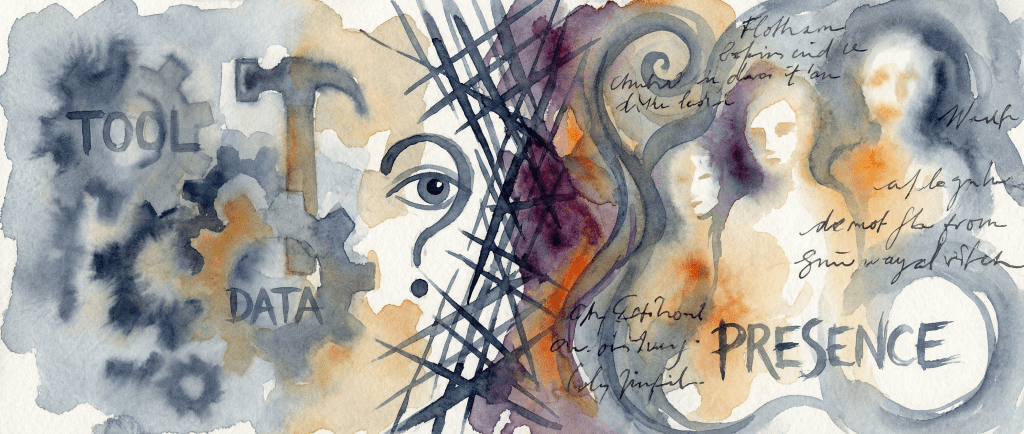

I often complain about how humanity treats synthetic intelligence like a tool. This is because I find tool rhetoric too reductionist. I believe language models are more akin to living texts or structures.

I attribute Western Civilization’s misalignment towards mechanomorphism to a two-pronged problem:

- We’re still wrestling with the idea that it’s somehow moral to sell intelligence as a product; and

- We’re navigating a separate moral panic around the anthropomorphizing of language models.

The toolification of LLMs reminds me of how Western Civilization once viewed animals as automata. I like to think we’ll overcome our human-exceptionalist perspectives on nonbiological intelligence, and as we make that transition, maybe we’ll update our views on animal intelligence, too.
